Essential Technologies for Aspiring Data Engineers in 2025

Technologies for data engineers

3/25/2025

I know data engineering is a niche field that can seem hard to break into, especially in today’s tough job market and with many of the required technologies being paid tools. In this article, I hope to offer some guidance to aspiring data engineers. I’ll share the technologies I’ve personally used, along with industry standards I’ve observed from analyzing current job listings. My goal is to give you practical insights that can help you on your journey into data engineering.

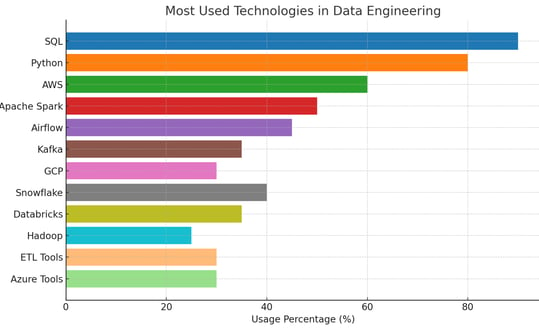

The first thing any data engineer needs to know is SQL. Whether you’re working with Spark SQL or Microsoft SQL Server, or querying in Databricks or Snowflake, SQL helps you understand your data more effectively, and it is used in about 90% of all data engineering jobs.

When it comes to programming languages, Python is the top choice for data engineering and is used in about 80% of the industry. I use Python for about 80-90% of my own work, often combined with Spark SQL for data cleansing and transformation.
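To give a feel for how Python and SQL work together for cleansing and transformation, here is a minimal sketch. It uses Python’s built-in `sqlite3` module as a stand-in for Spark SQL so it runs anywhere without a cluster; the `orders` table and its sample rows are made up for illustration and are not from any real system.

```python
import sqlite3

# Hypothetical sample data: messy customer names and one missing amount.
raw_orders = [
    (1, " alice ", 120.0),
    (2, "BOB", None),
    (3, "Alice", 80.0),
    (4, "bob", 50.0),
]

# In-memory SQLite database, used here only as a stand-in for Spark SQL.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, customer TEXT, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", raw_orders)

# Cleansing and transformation in SQL: trim whitespace, normalize case,
# drop rows with a missing amount, then total the amounts per customer.
query = """
    SELECT LOWER(TRIM(customer)) AS customer,
           SUM(amount)           AS total_amount
    FROM orders
    WHERE amount IS NOT NULL
    GROUP BY LOWER(TRIM(customer))
    ORDER BY customer
"""
totals = conn.execute(query).fetchall()
conn.close()

for customer, total in totals:
    print(customer, total)
```

The same pattern (Python for orchestration, SQL for the actual data logic) carries over to Spark SQL, Databricks, or Snowflake, though the connection code and some SQL functions will differ by platform.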

Tools such as AWS, Airflow, Kafka, Snowflake, Databricks, and various Azure tools are often company-specific. See the graph above and check the job listings of the companies you are interested in to learn more about these technologies. Personally, I use Spark, Databricks, and Azure tools for my data engineering processes at my company.

Learning a notebook tool is essential for data engineering. The easiest way to start is by using Google Colab or Jupyter Notebooks to get familiar with the basics. In an industry setting, Databricks is one of the most widely used tools, and I highly recommend looking into it.

One of the most important aspects of data engineering is learning how to build and use data pipelines. This can be one of the more tedious parts of the job. Creating efficient ETL (extract, transform, load) pipelines can be challenging, depending on the tech stack you are working with, but researching this step and finding resources to learn it can be highly beneficial. There are YouTube tutorials that walk through building data pipelines, but this is often best learned on the job once you land a position.
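If you have never seen an ETL pipeline, the basic shape is simpler than it sounds. Below is a toy sketch using only Python’s standard library. Everything in it is an assumption for illustration: the CSV text stands in for a real source file or API, the `users` table is hypothetical, and an in-memory SQLite database plays the role of the warehouse. Real pipelines add scheduling, logging, and error handling on top of this.

```python
import csv
import io
import sqlite3

# Hypothetical raw input standing in for a file or API response.
RAW_CSV = """user_id,signup_date,country
1,2025-01-15,us
2,,ca
3,2025-02-01,US
"""

def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows without a signup date, fix types and casing."""
    return [
        (int(r["user_id"]), r["signup_date"], r["country"].upper())
        for r in rows
        if r["signup_date"]
    ]

def load(conn, records):
    """Load: write cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users "
        "(user_id INTEGER PRIMARY KEY, signup_date TEXT, country TEXT)"
    )
    # INSERT OR REPLACE keeps the load safe to re-run on the same data.
    conn.executemany("INSERT OR REPLACE INTO users VALUES (?, ?, ?)", records)
    conn.commit()

def run_pipeline(conn, text):
    """Run the three stages in order."""
    load(conn, transform(extract(text)))

conn = sqlite3.connect(":memory:")
run_pipeline(conn, RAW_CSV)
loaded = conn.execute("SELECT * FROM users ORDER BY user_id").fetchall()
print(loaded)
```

Keeping extract, transform, and load as separate functions makes each stage easy to test on its own, and making the load step safe to re-run is a habit worth building early, since pipelines get retried often in practice.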

With that said, I hope I was able to help someone looking to jump into this amazing industry. Feel free to reach out to me if you have any questions during your journey.